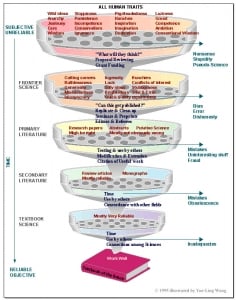

Many have noted that the Wikipedia Cold Fusion article is not a good source for those seeking information on the state of the art in this science. The Wiki article quibbles over whether cold fusion research is actually science. It also does not recognize the peer review process of LENR-CANR.org or other cold fusion science journals, seeing them as publications by a group of self-promoting crackpot scientists who delude us and each other with dreams of infinite energy akin to perpetual motion, i.e. pseudoscience. This limits the valid source material, turning Wiki Cold Fusion into a battleground and a poor encyclopedic science article with a very low Wiki rating.

To get to the heart of this matter, we will go beyond the surface of the field of battle at the Wiki cold fusion article and find, there in the depths of Wikipedia, the workings of the science behind the clean low energy nuclear reaction environment, now emerging into the marketplace as popular ‘cold fusion’ LENR energy.

It is heartening to find, in Wikipedia, science that challenges known theory and that confirms the science and the physics surrounding the low energy nuclear reaction. Here we have proof that the coverage of cutting edge cold fusion research has been sorely mistreated by the senior Wiki editors who ride that post. Explore the depths of Wiki science and find that nowhere else is cutting edge research that challenges known theory thrown into such a battleground of contention as is found at the Wikipedia article about Cold Fusion… Now why, given recent developments, is this so?

Explore Key Words at Wiki From This Cold Fusion (LENR) Patent

“Method for Producing Heavy Electrons”, NASA LENR Patent (USPTO link)

Surface plasmons (SPs), Surface plasmon polaritons (SPPs), Resonant frequency, Heavy electrons, Metal hydride, Fractal geometry, Energy, Unconventional superconductivity, Weak antiferromagnetism, Pseudo metamagnetism, Hydrogenated/deuterated molecular structures such as graphane and its nanotube variants, Quasi-crystalline arrays, Metamaterials, Dusty plasmas

Surface plasmons (SPs) are coherent electron oscillations that exist at the interface between any two materials where the real part of the dielectric function changes sign across the interface (e.g. a metal-dielectric interface, such as a metal sheet in air). SPs have lower energy than bulk (or volume) plasmons which quantise the longitudinal electron oscillations about positive ion cores within the bulk of an electron gas (or plasma). The existence of surface plasmons was first predicted in 1957 by Rufus Ritchie. In the following two decades, surface plasmons were extensively studied by many scientists, the foremost of whom were T. Turbadar in the 1950s and 1960s, and Heinz Raether, E. Kretschmann, and A. Otto in the 1960s and 1970s. Information transfer in nanoscale structures, similar to photonics, by means of surface plasmons, is referred to as plasmonics. Surface plasmons can be excited by both electrons and photons. (Wiki)

Surface plasmon polaritons (SPPs) are infrared or visible frequency electromagnetic waves trapped at or guided along metal-dielectric interfaces. These are shorter in wavelength than the incident light (photons). Hence, SPPs can provide a significant reduction in effective wavelength and a corresponding significant increase in spatial confinement and local field intensity. Collective charge oscillations at the boundary between an insulating dielectric medium (such as air or glass) and a metal (such as gold, silver or copper) are able to sustain the propagation of infrared or visible frequency electromagnetic waves known as surface plasmon-polaritons (SPP). SPPs are guided along metal-dielectric interfaces much in the same way light can be guided by an optical fiber, with the unique characteristic of subwavelength-scale confinement perpendicular to the interface. Surface plasmons (not SPPs) occur as light-induced packets of electrical charge that collectively oscillate at the surfaces of metals at optical frequencies.

Under specific conditions, the light that irradiates the object (incident light) couples with the surface plasmons to create self-sustaining, propagating electromagnetic waves known as surface plasmon polaritons (SPPs). Once launched, the SPPs ripple along the metal-dielectric interface and do not stray from this narrow path. Compared with the incident light that triggered the transformation, the SPPs can be much shorter in wavelength. In other words, when SPs couple with a photon, the resulting hybridised excitation is called a surface plasmon polariton (SPP). This SPP can propagate along the surface of a metal until energy is lost either via absorption in the metal or radiation into free-space. (Wiki)
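To put a rough number on "shorter in wavelength", here is a minimal Python sketch. It assumes the standard textbook dispersion relation for a flat metal-dielectric interface, k_spp = k_0 · sqrt(ε_m ε_d / (ε_m + ε_d)), which is not quoted in the Wiki text above. The function name and the permittivity value for the metal are illustrative assumptions (roughly silver-like at a red wavelength), not measured data.

```python
import cmath

def spp_wavelength_nm(free_space_wavelength_nm, eps_metal, eps_dielectric):
    """Estimate the SPP wavelength on a flat metal-dielectric interface.

    Assumes the flat-interface dispersion relation
    k_spp = k_0 * sqrt(eps_m * eps_d / (eps_m + eps_d)).
    """
    # Effective index seen by the SPP (complex because the metal is lossy).
    n_eff = cmath.sqrt(eps_metal * eps_dielectric / (eps_metal + eps_dielectric))
    # The real part of the index sets the wavelength along the interface.
    return free_space_wavelength_nm / n_eff.real

# Illustrative, assumed inputs: red light on a silver-like metal in air.
incident_nm = 633.0
eps_metal = complex(-18.0, 0.5)  # assumed permittivity, not a measured value
eps_air = 1.0

spp_nm = spp_wavelength_nm(incident_nm, eps_metal, eps_air)
print(f"incident: {incident_nm:.1f} nm, SPP: {spp_nm:.1f} nm")
```

With these assumed inputs the SPP comes out only a few percent shorter than the incident light. In this simple model the shortening becomes much stronger as the sum ε_m + ε_d approaches zero.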

Resonant frequencies In physics, resonance is the tendency of a system to oscillate with greater amplitude at some frequencies than at others. Frequencies at which the response amplitude is a relative maximum are known as the system’s resonant frequencies, or resonance frequencies. At these frequencies, even small periodic driving forces can produce large amplitude oscillations, because the system stores vibrational energy. Resonance occurs when a system is able to store and easily transfer energy between two or more different storage modes (such as kinetic energy and potential energy in the case of a pendulum). Resonance phenomena occur with all types of vibrations or waves: there is mechanical resonance, acoustic resonance, electromagnetic resonance, nuclear magnetic resonance (NMR), electron spin resonance (ESR), and resonance of quantum wave functions. (Wiki)
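The claim that small periodic driving forces can produce large oscillations near resonance can be sketched with the simplest textbook model, a driven, damped harmonic oscillator. The model, the function name, and every parameter value below are assumptions chosen for illustration; they are not taken from the Wiki text or from the patent.

```python
import math

def steady_state_amplitude(omega, omega0=1.0, gamma=0.1, force_per_mass=1.0):
    """Steady-state amplitude of x'' + gamma*x' + omega0**2 * x = f*cos(omega*t)."""
    return force_per_mass / math.sqrt(
        (omega0**2 - omega**2) ** 2 + (gamma * omega) ** 2
    )

# Scan driving frequencies and pick the one with the largest response.
frequencies = [i * 0.001 for i in range(1, 3001)]
peak_frequency = max(frequencies, key=steady_state_amplitude)

# For this model the peak is expected at sqrt(omega0**2 - gamma**2 / 2).
expected_peak = math.sqrt(1.0**2 - 0.1**2 / 2)

print(f"peak near omega = {peak_frequency:.3f} (expected {expected_peak:.3f})")
print(f"amplitude at peak:      {steady_state_amplitude(peak_frequency):.2f}")
print(f"amplitude at omega=0.5: {steady_state_amplitude(0.5):.2f}")
```

The same driving force gives a response several times larger at the resonant frequency than well away from it, which is the behavior the paragraph above describes.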

Muons (mu mesons aka heavy electrons) Muons are denoted by μ⁻ and antimuons by μ⁺. Muons were previously called mu mesons, but are not classified as mesons by modern particle physicists. Muons have a mass of 105.7 MeV/c², which is about 200 times the mass of an electron. Since the muon’s interactions are very similar to those of the electron, a muon can be thought of as a much heavier version of the electron. The eventual recognition of the “mu meson” muon as a simple “heavy electron” with no role at all in the nuclear interaction seemed so incongruous and surprising at the time that Nobel laureate I. I. Rabi famously quipped, “Who ordered that?” Muonic helium is created by substituting a muon for one of the electrons in helium-4. The muon orbits much closer to the nucleus, so muonic helium can therefore be regarded like an isotope of hydrogen whose nucleus consists of two neutrons, two protons and a muon, with a single electron outside. Colloquially, it could be called “hydrogen 4.1”, since the mass of the muon is roughly 0.1 u (atomic mass units). Chemically, muonic helium, possessing an unpaired valence electron, can bond with other atoms, and behaves more like a hydrogen atom than an inert helium atom. A positive muon, when stopped in ordinary matter, can also bind an electron and form an exotic atom known as muonium (Mu) atom, in which the muon acts as the nucleus. The positive muon, in this context, can be considered a pseudo-isotope of hydrogen with one ninth of the mass of the proton. Because the reduced mass of muonium, and hence its Bohr radius, is very close to that of hydrogen, this short-lived “atom” behaves chemically, to a first approximation, like hydrogen, deuterium and tritium.
Since the production of muons requires an available center of momentum frame energy of 105.7 MeV, neither ordinary radioactive decay events nor nuclear fission and fusion events (such as those occurring in nuclear reactors and nuclear weapons) are energetic enough to produce muons. Only nuclear fission produces single-nuclear-event energies in this range, but does not produce muons as the production of a single muon is possible only through the weak interaction, which does not take part in a nuclear fission. (Wiki)
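The mass figures quoted in the muon passage are easy to check with a few lines of arithmetic. The sketch below uses rounded rest-mass energies from standard reference tables (typed in here as assumptions, in MeV/c²) and the usual two-body reduced mass formula. The variable names are just for illustration.

```python
# Rounded rest masses in MeV/c^2 (standard reference values, entered by hand).
M_ELECTRON = 0.510999
M_MUON = 105.658
M_PROTON = 938.272
ATOMIC_MASS_UNIT = 931.494  # 1 u expressed in MeV/c^2

def reduced_mass(m1, m2):
    """Two-body reduced mass, in the same units as the inputs."""
    return m1 * m2 / (m1 + m2)

muon_to_electron = M_MUON / M_ELECTRON    # "about 200 times the electron"
muon_to_proton = M_MUON / M_PROTON        # "one ninth of the proton"
muon_in_u = M_MUON / ATOMIC_MASS_UNIT     # "roughly 0.1 u"

# The Bohr radius scales as 1 / reduced mass, so this ratio compares
# the size of muonium with the size of ordinary hydrogen.
bohr_radius_ratio = reduced_mass(M_ELECTRON, M_PROTON) / reduced_mass(
    M_ELECTRON, M_MUON
)

print(f"muon / electron mass: {muon_to_electron:.1f}")
print(f"muon / proton mass:   {muon_to_proton:.4f}")
print(f"muon mass in u:       {muon_in_u:.4f}")
print(f"muonium / hydrogen Bohr radius: {bohr_radius_ratio:.4f}")
```

The ratios agree with the passage: a muon is a little over 200 electron masses, close to one ninth of a proton mass, and muonium is within about half a percent of the size of a hydrogen atom.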

Metal hydrides Complex metal hydrides are salts wherein the anions contain hydrides. In the older chemical literature as well as contemporary materials science textbooks, a “metal hydride” is assumed to be nonmolecular, i.e. three-dimensional lattices of atomic ions. In such systems, hydrides are often interstitial and nonstoichiometric, and the bonding between the metal and hydrogen atoms is significantly ionic. In contrast, complex metal hydrides typically contain more than one type of metal or metalloid and may be soluble but invariably react with water. (Wiki)

Fractal Geometry One often-cited description that Mandelbrot published to describe geometric fractals is “a rough or fragmented geometric shape that can be split into parts, each of which is (at least approximately) a reduced-size copy of the whole”; this is generally helpful but limited. Authorities disagree on the exact definition of fractal, but most usually elaborate on the basic ideas of self-similarity and an unusual relationship with the space a fractal is embedded in. One point agreed on is that fractal patterns are characterized by fractal dimensions, but whereas these numbers quantify complexity (i.e., changing detail with changing scale), they neither uniquely describe nor specify details of how to construct particular fractal patterns. Other commonly listed features include multifractal scaling, characterized by more than one fractal dimension or scaling rule; fine or detailed structure at arbitrarily small scales, a consequence of which is that fractals may have emergent properties; and irregularity locally and globally that is not easily described in traditional Euclidean geometric language. (Wiki)
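For exactly self-similar shapes, the idea of a fractal dimension can be made concrete with the similarity dimension, D = log(N) / log(s), where a shape splits into N copies of itself, each reduced by a factor s. This is only one of several definitions of fractal dimension, and the function name below is an illustrative choice. The sketch applies it to two classic examples.

```python
import math

def similarity_dimension(copies, scale_factor):
    """Similarity dimension of a shape made of `copies` self-similar pieces,
    each shrunk by `scale_factor` relative to the whole."""
    return math.log(copies) / math.log(scale_factor)

# A straight line: 2 copies at half size, so the dimension is 1.
line = similarity_dimension(2, 2)
# Koch curve: 4 copies, each one third the size of the whole.
koch = similarity_dimension(4, 3)
# Sierpinski triangle: 3 copies, each half the size of the whole.
sierpinski = similarity_dimension(3, 2)

print(f"line: {line:.4f}, Koch curve: {koch:.4f}, Sierpinski: {sierpinski:.4f}")
```

The non-integer results for the Koch curve and the Sierpinski triangle are what is meant by an "unusual relationship" with the embedding space: each is more than a line but less than a filled plane.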

Energy In physics, energy is an indirectly observed quantity which comes in many forms, such as kinetic energy, potential energy, radiant energy, and many others, which are listed in this summary article. This is a major topic in science and technology and this article gives an overview of its major aspects, and provides links to the many specific articles about energy in its different forms and contexts. The question “what is energy?” is difficult to answer in a simple, intuitive way, although energy can be rigorously defined in theoretical physics. In the words of Richard Feynman, “It is important to realize that in physics today, we have no knowledge of what energy is. We do not have a picture that energy comes in little blobs of a definite amount.” Whenever physical scientists discover that a certain phenomenon appears to violate the law of energy conservation, new forms may be added, as is the case with dark energy, a hypothetical form of energy that permeates all of space and tends to increase the rate of expansion of the universe. (Wiki)

Unconventional Superconductors are materials that display superconductivity which does not conform to either the conventional BCS theory or Nikolay Bogolyubov’s theory or its extensions. After more than twenty years of intensive research the origin of high-temperature superconductivity is still not clear, but it seems that instead of electron-phonon attraction mechanisms, as in conventional superconductivity, one is dealing with genuine electronic mechanisms (e.g. by antiferromagnetic correlations), and instead of s-wave pairing, d-waves are substantial. One goal of all this research is room-temperature superconductivity. A room-temperature superconductor is a hypothetical material which would be capable of exhibiting superconductivity at operating temperatures above 0 °C (273.15 K). While this is not strictly “room temperature” (which would be approx. 20–25 °C), it is the temperature at which ice forms and can be reached and maintained easily in an everyday environment. At present, the highest temperature superconducting materials are the cuprates, which have demonstrated superconductivity at atmospheric pressure at temperatures as high as −135 °C (138 K). It is unknown whether any material exhibiting room-temperature superconductivity exists. The interest in its discovery arises from the repeated discovery of superconductivity at temperatures previously unexpected or held to be impossible. The potential benefits for society and science if such a material did exist are profound. (Wiki)

Weak antiferromagnetism One of the fundamental properties of an electron (besides that it carries charge) is that it has a dipole moment, i.e., it behaves as a tiny magnet. This dipole moment comes from the more fundamental property of the electron that it has quantum mechanical spin. The quantum mechanical nature of this spin causes the electron to only be able to be in two states, with the magnetic field either pointing “up” or “down” (for any choice of up and down). The spin of the electrons in atoms is the main source of ferromagnetism, although there is also a contribution from the orbital angular momentum of the electron about the nucleus. When these tiny magnetic dipoles are aligned in the same direction, their individual magnetic fields add together to create a measurable macroscopic field. However, in materials with a filled electron shell, the total dipole moment of the electrons is zero because the spins are in up/down pairs. Only atoms with partially filled shells (i.e., unpaired spins) can have a net magnetic moment, so ferromagnetism only occurs in materials with partially filled shells. Because of Hund’s rules, the first few electrons in a shell tend to have the same spin, thereby increasing the total dipole moment. These unpaired dipoles (often called simply “spins” even though they also generally include angular momentum) tend to align in parallel to an external magnetic field, an effect called paramagnetism. Ferromagnetism involves an additional phenomenon, however: the dipoles tend to align spontaneously, giving rise to a spontaneous magnetization, even when there is no applied field.

Diamagnetism Diamagnetism is a magnetic response shared by all substances. In response to an applied magnetic field, electrons precess (see Larmor precession), and by Lenz’s law they act to shield the interior of a body from the magnetic field. Thus, the moment produced is in the opposite direction to the field and the susceptibility is negative. This effect is weak but independent of temperature. A substance whose only magnetic response is diamagnetism is called a diamagnet.

Paramagnetism Paramagnetism is a weak positive response to a magnetic field due to rotation of electron spins. Paramagnetism occurs in certain kinds of iron-bearing minerals because the iron contains an unpaired electron in one of its shells (see Hund’s rules). Some are paramagnetic down to absolute zero and their susceptibility is inversely proportional to the temperature (see Curie’s law); others are magnetically ordered below a critical temperature and the susceptibility increases as it approaches that temperature (see Curie-Weiss law).

Ferromagnetism Collectively, strongly magnetic materials are often referred to as ferromagnets. However, this magnetism can arise as the result of more than one kind of magnetic order. In the strict sense, ferromagnetism refers to magnetic ordering where neighboring electron spins are aligned by the exchange interaction. Below a critical temperature called the Curie temperature, ferromagnets have a spontaneous magnetization and there is hysteresis in their response to a changing magnetic field. Most importantly for rock magnetism, they have remanence, so they can record the Earth’s field. Iron does not occur widely in its pure form. It is usually incorporated into iron oxides, oxyhydroxides and sulfides. In these compounds, the iron atoms are not close enough for direct exchange, so they are coupled by indirect exchange or superexchange. The result is that the crystal lattice is divided into two or more sublattices with different moments.

Ferrimagnetism Ferrimagnets have two sublattices with opposing moments. One sublattice has a larger moment, so there is a net unbalance. Ferrimagnets often behave like ferromagnets, but the temperature dependence of their spontaneous magnetization can be quite different. Louis Néel identified four types of temperature dependence, one of which involves a reversal of the magnetization. This phenomenon played a role in controversies over marine magnetic anomalies.

Antiferromagnetism Antiferromagnets, like ferrimagnets, have two sublattices with opposing moments, but now the moments are equal in magnitude. If the moments are exactly opposed, the magnet has no remanence. However, the moments can be tilted (spin canting), resulting in a moment nearly at right angles to the moments of the sublattices. (Wiki)
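The two temperature laws named in the paramagnetism passage are short enough to write out. A minimal sketch, assuming the usual textbook forms χ = C / T (Curie's law) and χ = C / (T − θ) (Curie-Weiss law, valid above the ordering temperature θ). The Curie constant and θ below are made-up illustrative numbers, not properties of any real mineral.

```python
def curie_susceptibility(temperature_k, curie_constant):
    """Curie's law: susceptibility is inversely proportional to temperature."""
    return curie_constant / temperature_k

def curie_weiss_susceptibility(temperature_k, curie_constant, theta_k):
    """Curie-Weiss law, only meaningful above the ordering temperature theta."""
    if temperature_k <= theta_k:
        raise ValueError("Curie-Weiss form applies only for T > theta")
    return curie_constant / (temperature_k - theta_k)

# Illustrative, assumed parameters (arbitrary units for the Curie constant).
C = 1.5
THETA = 100.0  # kelvin

for t in (400.0, 200.0, 120.0, 105.0):
    print(
        f"T = {t:5.1f} K  Curie: {curie_susceptibility(t, C):.4f}  "
        f"Curie-Weiss: {curie_weiss_susceptibility(t, C, THETA):.4f}"
    )
```

Under Curie's law, halving the temperature doubles the susceptibility. Under the Curie-Weiss form the susceptibility climbs steeply as the temperature comes down toward θ, which matches the passage's description of the susceptibility increasing as it approaches the critical temperature.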

Metamagnetism is a blanket term used loosely in physics to describe a sudden (often, dramatic) increase in the magnetization of a material with a small change in an externally applied magnetic field. The metamagnetic behavior may have quite different physical causes for different types of metamagnets. Some examples of physical mechanisms leading to metamagnetic behavior are: Itinerant Metamagnetism – Exchange splitting of the Fermi surface in a paramagnetic system of itinerant electrons causes an energetically favorable transition to bulk magnetization near the transition to a ferromagnet or other magnetically ordered state. Antiferromagnetic Transition – Field-induced spin flips in antiferromagnets cascade at a critical energy determined by the applied magnetic field. Depending on the material and experimental conditions, metamagnetism may be associated with a first-order phase transition, a continuous phase transition at a critical point (classical or quantum), or crossovers beyond a critical point that do not involve a phase transition at all. These wildly different physical explanations sometimes lead to confusion as to what the term “metamagnetic” refers to in specific cases. (Wiki)

Graphane is a two-dimensional polymer of carbon and hydrogen with the formula unit (CH)n where n is large. Graphane should not be confused with graphene, a two-dimensional form of carbon alone. Graphane is a form of hydrogenated graphene. Graphane’s carbon bonds are in sp3 configuration, as opposed to graphene’s sp2 bond configuration, thus graphane is a two-dimensional analog of cubic diamond. The first theoretical description of graphane was reported in 2003 and its preparation was reported in 2009. Full hydrogenation from both sides of a graphene sheet results in graphane, but partial hydrogenation leads to hydrogenated graphene. If graphene rests on a silica surface, hydrogenation on only one side of graphene preserves the hexagonal symmetry in graphane. One-sided hydrogenation of graphene becomes possible due to the existence of ripplings. Because the latter are distributed randomly, the graphane obtained is expected to be a disordered material, in contrast to two-sided graphane. Annealing allows the hydrogen to disperse, reverting to graphene. Note: p-doped graphane is postulated to be a high-temperature BCS theory superconductor with a Tc above 90 K. (Wiki)

Surface Layering (quasi-crystalline arrays) Surface layering is a quasi-crystalline structure at the surfaces of otherwise disordered liquids, where atoms or molecules of even the simplest liquid are stratified into well-defined layers parallel to the surface. While in crystalline solids such atomic layers can extend periodically throughout the entire dimension of a crystal, surface layering decays rapidly away from the surface and is limited to just a few near-surface region layers. Another difference between surface layering and crystalline structure is that atoms or molecules of surface-layered liquids are not ordered in-plane, while in crystalline solids they are. Surface layering was predicted theoretically by Stuart Rice at the University of Chicago in 1983 and has been experimentally discovered by Peter Pershan (Harvard) and his group, working in collaboration with Ben Ocko (Brookhaven) and Moshe Deutsch (Bar-Ilan) in 1995 in elemental liquid mercury and liquid gallium using x-ray reflectivity techniques. More recently layering has been shown to arise from electronic properties of metallic liquids, rather than thermodynamic variables such as surface tension, since surfaces of low-surface tension metallic liquids such as liquid potassium are layered, while those of dielectric liquids such as water, are not. (Wiki)

Metamaterials are artificial materials engineered to have properties that may not be found in nature. They are assemblies of multiple individual elements fashioned from conventional microscopic materials such as metals or plastics, but the materials are usually arranged in periodic patterns. Metamaterials gain their properties not from their composition, but from their exactingly-designed structures. Their precise shape, geometry, size, orientation and arrangement can affect the waves of light or sound in an unconventional manner, creating material properties which are unachievable with conventional materials. These metamaterials achieve desired effects by incorporating structural elements of sub-wavelength sizes, i.e. features that are actually smaller than the wavelength of the waves they affect. (Wiki)

Plasmonic metamaterials are metamaterials that exploit surface plasmons, which are produced from the interaction of light with metal-dielectric materials. Under specific conditions, the incident light couples with the surface plasmons to create self-sustaining, propagating electromagnetic waves known as surface plasmon polaritons (SPPs). Once launched, the SPPs ripple along the metal-dielectric interface and do not stray from this narrow path. Compared with the incident light that triggered the transformation, the SPPs can be much shorter in wavelength. By fabricating such metamaterials, fundamental limits tied to the wavelength of light are overcome. Light hitting a metamaterial is transformed into electromagnetic waves of a different variety: surface plasmon polaritons, which are shorter in wavelength than the incident light. This transformation leads to unusual and counterintuitive properties that might be harnessed for practical use. Moreover, new approaches that simplify the fabrication process of metamaterials are under development. This work also includes making new structures specifically designed to enable measurements of the materials’ novel properties. Furthermore, nanotechnology applications of these nanostructures are currently being researched, including microscopy beyond the diffraction limit. (Wiki)

Dusty Plasmas A dusty plasma is a plasma containing millimeter (10⁻³ m) to nanometer (10⁻⁹ m) sized particles suspended in it. Dust particles are charged and the plasma and particles behave as a plasma. Dust particles may form larger particles resulting in “grain plasmas”. Due to the additional complexity of studying plasmas with charged dust particles, dusty plasmas are also known as Complex Plasmas. Dusty plasmas are interesting because the presence of particles significantly alters the charged particle equilibrium leading to different phenomena. It is a field of current research. Electrostatic coupling between the grains can vary over a wide range so that the states of the dusty plasma can change from weakly coupled (gaseous) to crystalline. Such plasmas are of interest as a non-Hamiltonian system of interacting particles and as a means to study generic fundamental physics of self-organization, pattern formation, phase transitions, and scaling. (Wiki)
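One common way to describe how strongly the grains are coupled is the Coulomb coupling parameter Γ, the ratio of the electrostatic energy between neighboring grains to their thermal energy. The sketch below is a deliberately simplified estimate: it ignores the screening by the surrounding plasma, and the grain charges, spacing, and temperature are assumed values chosen only to show the range. The crystallization threshold of roughly 170 is a commonly quoted figure for an idealized one-component plasma, not a number from the Wiki text.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs
VACUUM_PERMITTIVITY = 8.8541878128e-12  # farads per meter
BOLTZMANN = 1.380649e-23  # joules per kelvin

def coulomb_coupling(charge_number, spacing_m, temperature_k):
    """Unscreened coupling parameter: Coulomb energy / thermal energy."""
    charge = charge_number * ELEMENTARY_CHARGE
    coulomb_energy = charge**2 / (4 * math.pi * VACUUM_PERMITTIVITY * spacing_m)
    return coulomb_energy / (BOLTZMANN * temperature_k)

# Assumed grain spacing and temperature, for illustration only.
SPACING = 1e-4  # meters
TEMPERATURE = 300.0  # kelvin

for grain_charge in (10, 100, 10_000):
    gamma = coulomb_coupling(grain_charge, SPACING, TEMPERATURE)
    print(f"{grain_charge:>6} elementary charges per grain: Gamma = {gamma:.3g}")
```

Because Γ grows with the square of the grain charge, modest changes in charging move the same dust cloud from a gas-like state (Γ well below 1) to one far above the rough crystallization threshold, which is the wide range the passage refers to.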

This brings us to the end of our exploration for now (there will be more of this Wiki series in the near future).

I hope you have enjoyed the trip. When reading the NASA LENR – Cold Fusion Patent after completing this journey, you may be surprised at the depth of your new insight. Also, please remember, when informing others about LENR – Cold Fusion Energy be sure to tell them… “Explore beyond the surface of ‘Wikipedia Cold Fusion’ and take a journey into the depths of Wiki science.”

Thanks,

Greg Goble

HEY! Visit http://lenr-canr.org/ This site features a library of papers on LENR, Low Energy Nuclear Reactions, also known as Cold Fusion. (CANR, Chemically Assisted Nuclear Reactions, is another term for this phenomenon.) The library includes more than 1,000 original scientific papers reprinted with permission from the authors and publishers. The papers are linked to a bibliography of over 3,500 journal papers, news articles, and books (they even have a few quality encyclopedia articles) about LENR Science and Engineering… Popularly known as ‘cold fusion’ now… Forever, historically speaking that is.

NEXT

LENR Sister Field – Thermoelectric Energy

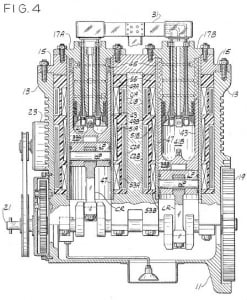

Soon I will take us back in time to the field of invention of Harold Aspden, the father of efficient thermoelectric energy devices, the likes of which power NASA deep space probes. Key words in his breakthrough patent are worth noting, as they are common to the science and the environment of the cold fusion phenomenon. Harold Aspden was fascinated by cold fusion as well as the biological transmutation of elements, seeing them as relevant to his field… the science of thermoelectric energy conversion.